In recent years, artificial intelligence (AI) has made tremendous strides, yet the practical application of these advances in robotics remains disappointingly limited. Most industrial robots are designed for highly specialized tasks in controlled environments such as factories and warehouses. They follow predetermined commands with rigid precision but cannot adapt to unexpected scenarios or changes in their surroundings. The handful of robots capable of visual perception and object manipulation are still hindered by limited dexterity and a lack of broad physical intelligence. Consequently, the potential for such robots to take on a variety of industrial tasks remains largely untapped.

The Challenge of Everyday Variability

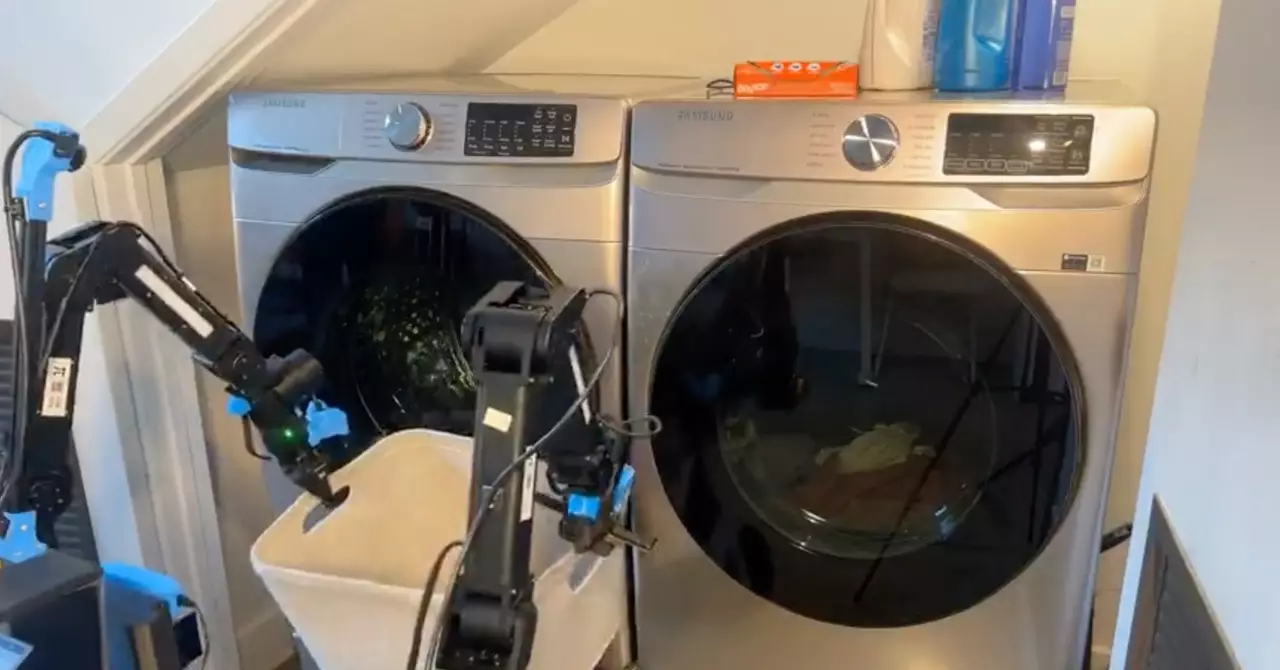

Human life is complex and ever-changing, so what is asked of robots extends beyond performing a single task well. To be genuinely useful, robots must learn to handle the unpredictability and disorder inherent in domestic life. Household chores are diverse and non-standardized, and they demand a new level of agility and adaptability. This calls for approaches that cultivate generalized skills rather than specialized routines.

Optimism Fueled by AI Advances

Despite these limitations, optimism about the future of robotics is growing, spurred by advances in AI. High-profile ventures such as Elon Musk’s Tesla are entering the humanoid robot market; Tesla is developing Optimus, a robot that some speculate could become a household fixture by 2040. With a projected price between $20,000 and $25,000, Optimus is intended to perform a variety of tasks. However, skepticism remains about whether that level of functionality is achievable on the proposed timeline.

A breakthrough could come from transfer learning: the ability of robots to apply knowledge gained in one task to other, distinct tasks. Historically, robot programming focused on building tailored machines to execute individual tasks, which limited what could be achieved. However, emerging research, including Google’s Open X-Embodiment project, has made strides in enabling knowledge sharing across multiple robots in various research environments. This represents a significant shift in how robotic learning is envisioned and carried out, but considerable challenges remain.

One of the major hurdles for robot learning is the scarcity of training data: nothing comparable to the vast quantities of text used to train large language models exists for robots. Organizations like Physical Intelligence therefore face the daunting task of creating their own datasets. They are exploring methods that combine vision-language models with techniques such as diffusion modeling to achieve general learning capabilities. To elevate robots from clumsy automata to versatile assistants, these learning methods must be expanded, refined, and applied at scale.

Looking Forward: The Journey Continues

While the promise of intelligent robots that can move seamlessly between industrial and domestic environments is tantalizing, a long road remains ahead. Experts such as Levine stress that current innovations should be seen as scaffolding for future developments. The objective is to create robots that can genuinely respond to the dynamic nature of real-world tasks. Continued investment in research, together with a focus on adaptable learning frameworks, will be essential for transforming today’s limited robotic tools into the versatile helpers of tomorrow.