Competition between closed-source and open-source AI models is intensifying. Among the notable players is DeepSeek, a Chinese AI startup that has drawn attention with the release of its ultra-large language model, DeepSeek-V3. With the stated ambition of democratizing AI, the company is challenging established giants and pushing the boundaries of what open-source models can achieve.

DeepSeek-V3 has 671 billion parameters. Rather than relying on size alone, it uses a mixture-of-experts (MoE) design that activates only a subset of those parameters for each token (37 billion, according to DeepSeek). This selective activation keeps per-token compute well below that of a dense model of the same size while preserving accuracy, in contrast to the monolithic architectures used by many competitors. Despite being an open-source model, DeepSeek-V3 has posted benchmark results that rival leading proprietary systems from Anthropic and OpenAI.
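
The selective activation described above can be illustrated with a generic top-k router. This is a minimal sketch of the general MoE technique, not DeepSeek's actual gating function: the names `top_k_route` and `moe_forward`, the toy experts, and the softmax over the selected scores are all assumptions made for illustration.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(scores, k):
    """Return (expert_index, gate_weight) pairs for the k highest-scoring experts."""
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    weights = softmax([scores[i] for i in top])  # gate weights sum to 1
    return list(zip(top, weights))


def moe_forward(x, experts, router_weights, k=2):
    """Run one token through only its k selected experts and mix the outputs."""
    # One affinity score per expert: dot product of the token with a router vector.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    out = [0.0] * len(x)
    for idx, gate in top_k_route(scores, k):
        y = experts[idx](x)  # unselected experts are never evaluated
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


# Toy setup (hypothetical): 8 experts that just scale the input, and a call log.
calls = []


def make_expert(i):
    def expert(x):
        calls.append(i)
        return [(i + 1) * xi for xi in x]
    return expert


experts = [make_expert(i) for i in range(8)]
random.seed(0)
router_weights = [[random.uniform(-1, 1) for _ in range(4)] for _ in range(8)]
token = [0.5, -1.0, 0.25, 2.0]
output = moe_forward(token, experts, router_weights, k=2)
print(f"experts evaluated: {sorted(calls)} of {len(experts)}")
```

Only two of the eight toy experts run for the token, which is the source of the compute savings: total parameter count grows with the number of experts, while per-token cost grows only with `k`.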

The architecture builds on that of the model’s predecessor, DeepSeek-V2. Both models combine multi-head latent attention (MLA) with the DeepSeekMoE architecture. DeepSeek-V3 adds an auxiliary-loss-free load-balancing method, which dynamically monitors and adjusts the load on each expert so that work is spread evenly without the cost to model quality that an auxiliary balancing loss can impose.
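
The load-balancing idea can be sketched as a per-expert bias that is added to the routing scores for selection only and nudged after each batch: down for overloaded experts, up for underloaded ones. The code below is a simplified illustration under stated assumptions, not DeepSeek's implementation; the function names, the step size, the Gaussian noise model, and the toy affinities are all hypothetical.

```python
import random


def select_experts(scores, bias, k):
    """Pick the top-k experts by biased score. The bias affects selection only;
    any gate weights would still be computed from the unbiased scores."""
    biased = [s + b for s, b in zip(scores, bias)]
    return sorted(range(len(scores)), key=lambda i: biased[i], reverse=True)[:k]


def update_bias(bias, load, step=0.02):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(load) / len(load)
    new_bias = []
    for b, n in zip(bias, load):
        if n > mean_load:
            new_bias.append(b - step)
        elif n < mean_load:
            new_bias.append(b + step)
        else:
            new_bias.append(b)
    return new_bias


def run_batch(rng, base, bias, n_tokens, k):
    """Route n_tokens synthetic tokens and count how many land on each expert."""
    load = [0] * len(base)
    for _ in range(n_tokens):
        scores = [b + rng.gauss(0.0, 0.3) for b in base]
        for i in select_experts(scores, bias, k):
            load[i] += 1
    return load


rng = random.Random(0)
base = [1.0, 0.6, 0.2, -0.2]  # skewed affinities: expert 0 is favoured at first
bias = [0.0] * len(base)

first_load = run_batch(rng, base, bias, n_tokens=200, k=1)
load = first_load
for _ in range(100):
    bias = update_bias(bias, load)
    load = run_batch(rng, base, bias, n_tokens=200, k=1)

print("first batch load:", first_load)
print("last batch load: ", load)
```

Because the correction is applied through the routing bias rather than through an extra loss term, the training objective itself is left unchanged, which is the point of calling the method auxiliary-loss-free.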

One of the main selling points of DeepSeek-V3 is efficiency, in training cost as well as in compute. The model was pre-trained on 14.8 trillion high-quality tokens and then put through a two-stage context-length extension: first to 32,000 tokens, then to 128,000 tokens. The longer context window lets the model handle longer inputs and more complex queries.

DeepSeek also used the DualPipe algorithm for pipeline parallelism and FP8 mixed-precision training to reduce the cost of this training run. The company reports that training cost approximately $5.57 million, far below the hundreds of millions of dollars typically associated with training large language models. For context, Meta’s Llama-3.1 is reported to have cost upwards of $500 million to develop.
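
The reported cost is a simple product of GPU-hours and an hourly rate. The breakdown below uses the figures given in DeepSeek's technical report rather than in this article, including an assumed rental price of $2 per H800 GPU-hour; by the report's own account it covers only the final training run, not prior research or ablation experiments.

```python
# GPU-hours per training stage, as stated in DeepSeek's technical report
# (not in this article; treat them as quoted figures, not measurements).
GPU_HOURS = {
    "pre_training": 2_664_000,
    "context_extension": 119_000,
    "post_training": 5_000,
}
RATE_USD_PER_GPU_HOUR = 2.00  # assumed H800 rental price used in the report

total_hours = sum(GPU_HOURS.values())
total_cost = total_hours * RATE_USD_PER_GPU_HOUR

print(f"total GPU-hours: {total_hours:,}")      # total GPU-hours: 2,788,000
print(f"estimated cost:  ${total_cost:,.0f}")   # estimated cost:  $5,576,000
```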

The benchmarks released by DeepSeek place the model at the top of the open-source field. DeepSeek-V3 outperformed other open models, including Meta’s Llama-3.1-405B and Qwen 2.5-72B, across a range of tests. Its lead was most pronounced on mathematics and Chinese-language benchmarks; on Math-500, for example, DeepSeek-V3 scored 90.2, well ahead of its closest competitor, Qwen.

However, while DeepSeek-V3 holds significant promise, it is not without its challengers. The model faced strong contention from Anthropic’s Claude 3.5 Sonnet, which outperformed DeepSeek-V3 in several benchmarks. Such results imply that while open-source models are catching up, continual advancement is essential for sustaining competitive relevance.

DeepSeek’s advancements signify more than a competitive model; they point to a potential shift in the dynamics of the AI ecosystem. By offering a strong open-source alternative, DeepSeek gives organizations more options and reduces their dependence on a single dominant provider. This diversification fosters innovation and encourages a more equitable distribution of AI benefits, which matters in an industry that often faces ethical scrutiny over concentrated power.

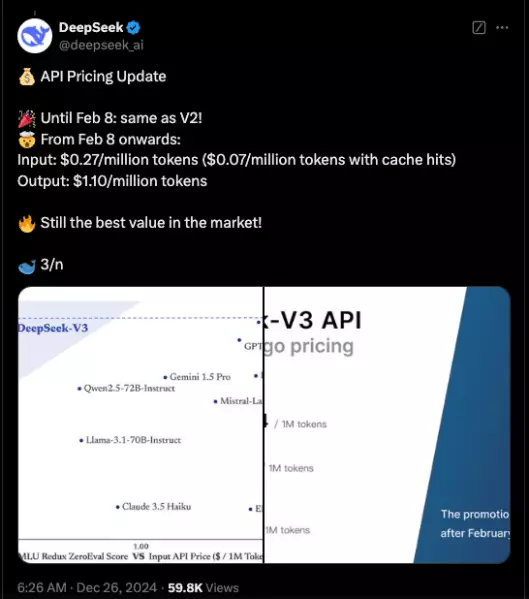

Enterprises that want to explore DeepSeek-V3 can access the model via Hugging Face or through DeepSeek Chat, a user-friendly interface for interacting with the model. DeepSeek also offers an API for commercial use.
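
As a rough sketch of what API access might look like, the snippet below builds (but does not send) a chat request using only the Python standard library. The endpoint URL, the model name `deepseek-chat`, and the OpenAI-style request and response shapes are assumptions that should be checked against DeepSeek's current API documentation; `build_chat_request` is a hypothetical helper.

```python
import json
import urllib.request

# Assumed values: verify both against DeepSeek's API documentation.
API_URL = "https://api.deepseek.com/chat/completions"
MODEL = "deepseek-chat"


def build_chat_request(prompt, api_key):
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


request = build_chat_request(
    "Summarize mixture-of-experts in one sentence.", "YOUR_API_KEY"
)

# To send it (requires a valid key and network access):
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)["choices"][0]["message"]["content"]
```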

While the journey to artificial general intelligence (AGI) remains challenging, models like DeepSeek-V3 represent significant strides toward achieving this goal. By bolstering open-source initiatives and championing innovative architectures, DeepSeek has set its sights on crafting more intuitive and widely accessible AI systems. The implications of such a shift are vast, ranging from enhancing enterprise efficiency to fostering a competitive arena that benefits users across the globe. As the landscape of AI continues to evolve, the spotlight remains firmly fixed on developments like DeepSeek-V3, which aim to blend boundary-pushing technology with open access.