Recent advances in artificial intelligence (AI), particularly large language models (LLMs) such as GPT-4, have revealed intriguing facets of language processing. A phenomenon dubbed the “Arrow of Time” effect has emerged from research by Professor Clément Hongler of the École Polytechnique Fédérale de Lausanne (EPFL) and Jérémie Wenger of Goldsmiths, University of London. The study examines the predictive abilities of these models and finds that they are consistently better at predicting the next word in a sentence than the previous one.

Large language models have transformed many domains, supporting text generation, coding, translation, and chatbot interactions. At a fundamental level, these models work by prediction: they use the context provided by the preceding words to forecast the next one. This mechanism, though deceptively simple, is the backbone of how they operate, enabling them to hold coherent, contextually relevant conversations and to generate varied text.
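To make the idea of next-word prediction concrete, here is a minimal sketch under a strong simplifying assumption: instead of a neural network that conditions on thousands of preceding tokens, it uses a hypothetical toy bigram counter that looks at only the single preceding word. The function names (`train_bigram`, `predict_next`, `generate`) are illustrative, not taken from any real LLM library.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def predict_next(counts, prev):
    """Return the most frequent continuation of `prev`, or None if unseen."""
    if prev not in counts:
        return None
    return counts[prev].most_common(1)[0][0]

def generate(counts, start, length):
    """Autoregressive generation: each prediction becomes the context for the next."""
    out = [start]
    for _ in range(length):
        nxt = predict_next(counts, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return out

corpus = "the cat sat on the mat and the cat slept".split()
model = train_bigram(corpus)
print(predict_next(model, "the"))  # cat  ("cat" follows "the" twice, "mat" once)
print(generate(model, "the", 4))   # ['the', 'cat', 'sat', 'on', 'the']
```

Real LLMs replace the count table with a learned network and sample from a probability distribution rather than always taking the top word, but the loop structure (predict, append, repeat) is the same.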

Understanding the limitations of these models is just as important. When asked to predict earlier words, essentially working in reverse, the models were consistently less accurate than when predicting forward. This finding points to a consistent asymmetry in how language models process information and calls into question long-held assumptions about the structure of language and about how AI systems process information.
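Why is any gap surprising? A standard identity of probability theory, not something specific to this study, shows that the same joint probability of a word sequence $w_1, \dots, w_n$ can be factored in either reading direction:

$$
p(w_1, \dots, w_n) = \prod_{t=1}^{n} p(w_t \mid w_1, \dots, w_{t-1}) = \prod_{t=1}^{n} p(w_t \mid w_{t+1}, \dots, w_n)
$$

Taking $-\log$ of both sides, a model that captured the true distribution exactly would achieve the same total cross-entropy reading forwards or backwards. A gap measured in practice therefore suggests that one direction is harder for real, imperfect models to learn, rather than that the text carries different amounts of information in each direction.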

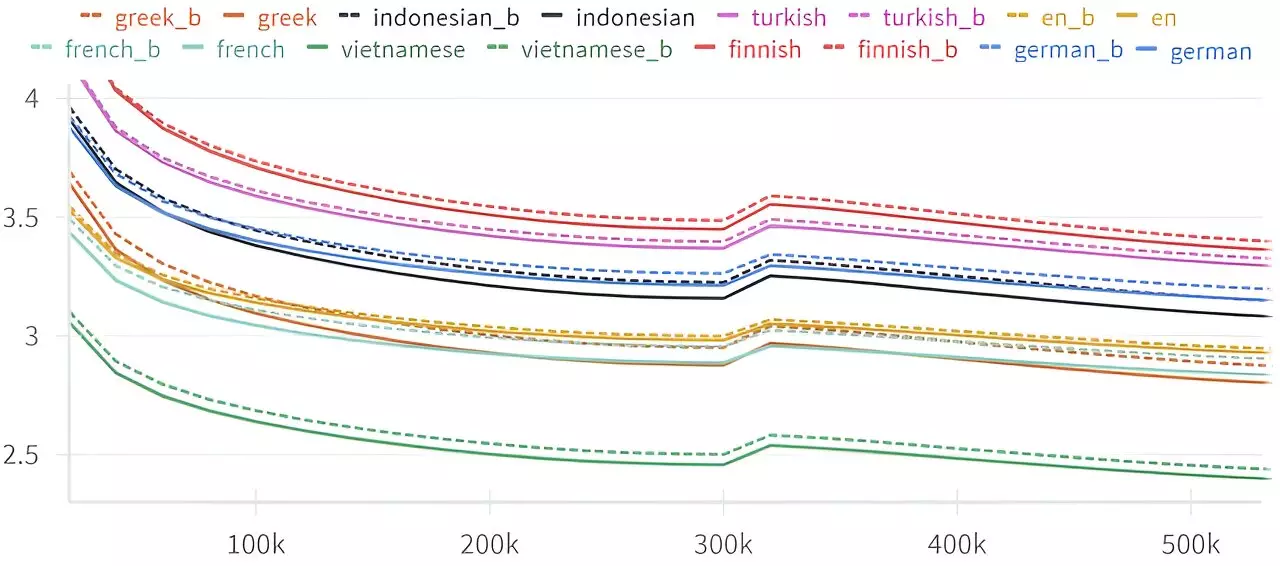

The research by Hongler, Wenger, and machine learning researcher Vassilis Papadopoulos examined language models with several architectures, including Generative Pre-trained Transformers (GPT), Gated Recurrent Units (GRU), and Long Short-Term Memory (LSTM) networks. Every one of these models showed the “Arrow of Time” effect: prediction accuracy drops when the model has to work backwards. According to Hongler, the models are impressively good at predicting the next word but consistently a few percentage points worse at predicting the preceding one.
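One straightforward way to set up a forward-versus-backward comparison (an assumption here, not a description of the authors' code) is to train the same model twice, once on text in normal order and once on the token-reversed text, then compare the average loss in bits per token on held-out data. The sketch below does this with a hypothetical add-one-smoothed bigram model; the helper names and the tiny corpus are illustrative only.

```python
import math
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count, for each token, which tokens immediately follow it."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def avg_bits_per_token(counts, tokens, vocab_size):
    """Average -log2 p(next | prev) over a sequence, with add-one smoothing
    so that unseen pairs still get nonzero probability."""
    pairs = list(zip(tokens, tokens[1:]))
    total = 0.0
    for prev, nxt in pairs:
        row = counts.get(prev, Counter())  # empty row if prev was never seen
        p = (row[nxt] + 1) / (sum(row.values()) + vocab_size)
        total -= math.log2(p)
    return total / len(pairs)

train_tokens = "the cat sat on the mat and the dog sat on the rug".split()
test_tokens = "the dog sat on the mat".split()
vocab_size = len(set(train_tokens) | set(test_tokens))

# Forward model: trained and evaluated on text in normal reading order.
forward_model = train_bigram(train_tokens)
forward_bits = avg_bits_per_token(forward_model, test_tokens, vocab_size)

# Backward model: identical procedure applied to the token-reversed text,
# so "next" now means "previous" in the original order.
backward_model = train_bigram(train_tokens[::-1])
backward_bits = avg_bits_per_token(backward_model, test_tokens[::-1], vocab_size)

print(f"forward:  {forward_bits:.3f} bits/token")
print(f"backward: {backward_bits:.3f} bits/token")
```

Lower bits per token means better prediction. A toy counter like this is not expected to reproduce the few-percentage-point gap reported for GPT, GRU, and LSTM models trained on large corpora; it only shows the shape of the experiment: same architecture, same data, opposite reading direction.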

This observation echoes earlier work, notably that of Claude Shannon, the father of information theory. In his 1951 paper, Shannon studied how hard it is to predict the letters of a sequence. Although he found only minimal differences between forward and backward prediction by humans, the gap in LLM performance points to an underlying complexity that merits deeper investigation.

The researchers suggest that the difficulty of backward prediction may be tied to deep properties of human language that went unnoticed until the advent of large language models. If this hypothesis holds, it could open doors not only in linguistics but also in detecting intelligent behavior in machines, or even the presence of life. The interplay between time and causality adds to the study's significance: the structure of language could offer a window onto broader questions about intelligence.

As Hongler and others continue to investigate these phenomena, which are closely linked to the passage of time and to causality, the results could also reshape our understanding of emergent systems in physics. Exploring how language and intelligence interconnect could yield insights not only for AI development but also into the nature of time itself.

The research has a notable origin: a creative project involving a chatbot designed for improvisational theater. The goal was to let the bot build a story toward a predetermined ending, which in effect required it to “speak backwards.” The unexpected results led to the discovery of broader links between language, intelligence, and the perception of time.

Hongler remains enthusiastic about the project, and its path from a theatrical experiment to new claims about language models highlights the intersection of art and science. It shows that experimentation need not narrow down to a single objective; it can also reveal insights that challenge established paradigms.

The “Arrow of Time” research advances our understanding of AI language models and prompts a reevaluation of how we interpret language and intelligence through the lens of time. The study underscores the need for continued exploration in AI and opens avenues for future research that could reshape both technological and philosophical discussions of language and cognition.