Deep learning has emerged as a transformative technology across various sectors, ranging from health care diagnostics to financial modeling. Its effectiveness relies on processing vast amounts of data, often necessitating the use of powerful cloud computing resources. However, this dependence on cloud infrastructure raises significant privacy and security concerns, especially in sensitive fields like health care, where patient confidentiality is paramount. Researchers at MIT have sought to address these vulnerabilities by developing a novel security protocol that employs quantum principles to safeguard data during deep learning processes.

The Dilemma of Cloud Computing in Sensitive Fields

As deep learning models, particularly advanced ones like GPT-4, become more sophisticated, they require extensive computational power. Many organizations therefore turn to cloud services that can handle the computational load. In medical settings, for instance, hospitals may hesitate to use AI to analyze patient data because they fear data breaches and privacy violations. This tension between the usefulness of deep learning and the need for strict data confidentiality has put organizations in a difficult position. Until it is resolved, the full potential of AI in critical sectors remains out of reach.

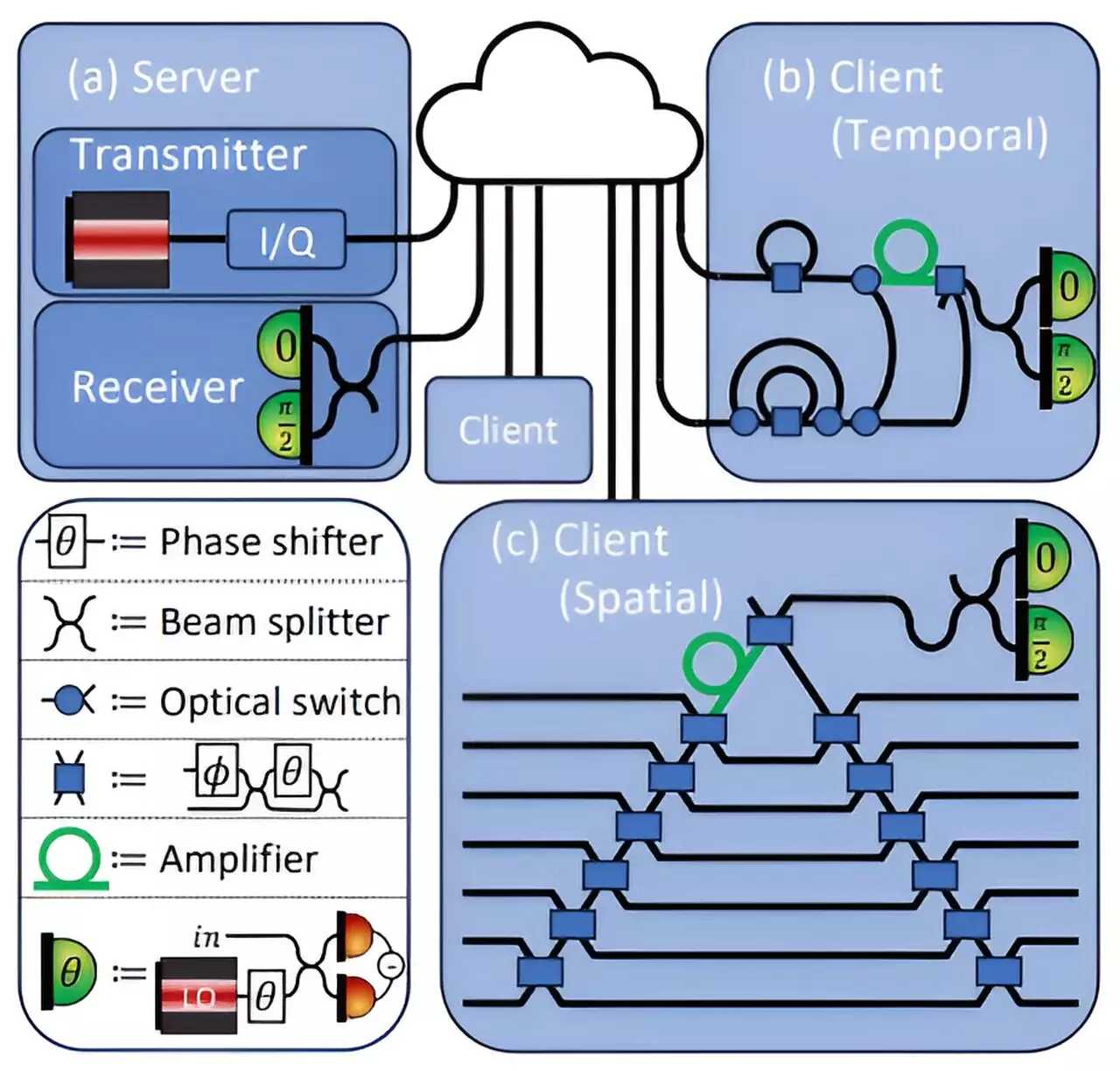

To address these challenges, MIT researchers developed a security protocol that uses the quantum properties of light to protect sensitive information during deep learning computations. The protocol encodes confidential data into the laser light used in fiber-optic communication systems. Because it rests on fundamental principles of quantum mechanics, the method is designed so that unauthorized parties cannot intercept or copy the information. This protects both the client's data, such as patient records, and the server's proprietary model.

A key feature of the MIT protocol is that it balances security with model accuracy. In experimental validations, the researchers reported that the protocol maintained 96% accuracy while keeping sensitive data secure. Kfir Sulimany, the lead author of the paper describing this work, highlighted the solution's value: “Our protocol enables users to harness these powerful models without compromising the privacy of their data.”

In their setup, the research team considered a scenario in which a client holding sensitive data, such as medical images, interacts with a central server that runs a deep learning model. The challenge is to obtain predictions from the model without disclosing patient information to the server. The protocol addresses this by letting the client run the model on its private data while that data stays hidden from the server.
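
To make the interaction pattern concrete, here is a purely classical toy of the message flow only. For illustration it assumes that the weights are sent one layer at a time. The client applies each layer to its private input and keeps only the resulting activation, so its raw data never leaves the client. `ToyServer`, `run_client`, and the layer shapes are hypothetical. Because the weights travel as ordinary Python lists, this sketch provides none of the quantum protocol's security guarantees.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def relu(v: Vector) -> Vector:
    return [max(0.0, x) for x in v]


def apply_layer(weights: Matrix, x: Vector) -> Vector:
    # Client-side step: matrix-vector product followed by ReLU.
    return relu([sum(w * xi for w, xi in zip(row, x)) for row in weights])


class ToyServer:
    """Hypothetical server that owns the model weights and never sees client data."""

    def __init__(self, layers: List[Matrix]) -> None:
        self._layers = layers
        self.residuals_received = 0

    def num_layers(self) -> int:
        return len(self._layers)

    def send_layer(self, i: int) -> Matrix:
        # In the MIT protocol the weights travel as quantum states of light.
        # Here they are plain lists, so this toy offers NO security:
        # a classical client could simply copy them.
        return self._layers[i]

    def receive_residual(self, i: int, residual: Matrix) -> None:
        # Stand-in for the client returning the "residual light" for layer i.
        self.residuals_received += 1


def run_client(private_input: Vector, server: ToyServer) -> Vector:
    """Hypothetical client loop: data stays local, only layers cross the wire."""
    x = private_input
    for i in range(server.num_layers()):
        w = server.send_layer(i)
        x = apply_layer(w, x)  # keep only the activation needed next
        server.receive_residual(i, w)
    return x


if __name__ == "__main__":
    server = ToyServer([[[1.0, -1.0], [0.5, 0.5]], [[2.0, 1.0]]])
    print(run_client([3.0, 1.0], server))
```

Running it prints `[6.0]`. The server only ever hands out weights and receives something back. It never receives the input `[3.0, 1.0]`.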

The researchers relied on the no-cloning theorem, a fundamental principle of quantum mechanics stating that an unknown quantum state cannot be perfectly copied. In their approach, the weights of the neural network, which are the model's learned parameters, are encoded into the quantum states of light. As the server transmits these weights, the client performs the operations needed to compute a prediction. However, the client can access only the information required for each immediate computation, which prevents it from gleaning additional details about the model.
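
The intuition behind the no-cloning argument can be illustrated with a textbook single-qubit toy. This is a swapped-in illustration of measurement disturbance, not the MIT scheme, which encodes weights in light rather than in individual qubits. The toy makes three assumptions:

- A value is encoded as the angle θ of a real qubit state.
- A curious client tries to read that value by measuring in the computational basis.
- The server checks the returned state against the one it prepared.

All function names are hypothetical.

```python
import math
import random
from typing import Tuple

State = Tuple[float, float]  # real amplitudes (a, b) of a|0> + b|1>


def prepare(theta: float) -> State:
    # Hypothetical encoding of one value as the angle of a single-qubit state.
    return (math.cos(theta / 2), math.sin(theta / 2))


def measure_computational(state: State, rng: random.Random) -> State:
    # Born rule: outcome |0> with probability a^2, after which the state collapses.
    a, _ = state
    return (1.0, 0.0) if rng.random() < a * a else (0.0, 1.0)


def server_check(state: State, theta: float, rng: random.Random) -> bool:
    # The server projects the returned state onto the one it prepared;
    # the check passes with probability |<psi(theta)|state>|^2.
    ref = prepare(theta)
    overlap = ref[0] * state[0] + ref[1] * state[1]
    return rng.random() < overlap * overlap


def error_rate(theta: float, snoop: bool, trials: int = 20000, seed: int = 0) -> float:
    """Fraction of simulated rounds in which the server's check fails."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        state = prepare(theta)
        if snoop:  # client tries to read out more than it needs
            state = measure_computational(state, rng)
        if not server_check(state, theta, rng):
            failures += 1
    return failures / trials


def expected_error_rate(theta: float) -> float:
    # Analytic value for a snooping client:
    # 1 - (cos^4 + sin^4)(theta / 2) = sin^2(theta) / 2.
    return math.sin(theta) ** 2 / 2
```

For θ = π/2, an untouched state always passes the check (error rate 0). A client that measures the state fails about half the time, matching sin²θ/2 = 0.5. Extracting extra information leaves a trace precisely because the state cannot be copied first and measured on the copy.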

In practice, this means the client can access only specific parts of the information it receives. Once the computations are complete, any information that might be inadvertently revealed is minimized. Sulimany explains that the protocol is carefully designed so that, even though the client operates on the data, the residual light it sends back to the server contains no personal information about the client.

Looking ahead, the researchers are interested in extending the protocol to federated learning, a collaborative training framework in which multiple parties contribute to a shared model without exposing their raw data. They also suggest that applying the protocol to quantum operations, rather than the classical operations studied in this work, could improve both accuracy and security. As artificial intelligence and quantum cryptography continue to evolve, combining the two offers a promising way to protect sensitive information.

The implications of this research are profound, particularly as digital infrastructures grow more complex and interconnected. With increasing concerns about data breaches and privacy violations, solutions that integrate quantum security for deep learning systems could pave the way for more robust and trustworthy applications in health care and beyond.

This work from MIT is a significant step toward secure cloud-based deep learning. By combining techniques from quantum physics and machine learning, the researchers have devised a method that protects sensitive data while preserving model performance. Innovations like this could become essential for building trust in technology, particularly in sectors where confidentiality is critical. As Sulimany's remarks suggest, the protocol's dual commitment to security and accuracy signals a promising shift in how sensitive data can be handled in the cloud.