In enterprise artificial intelligence (AI), one of the hardest practical tasks is connecting an organization's own data to large language models (LLMs). At its 2024 re:Invent conference, AWS announced a set of services designed to streamline this process through a methodology known as Retrieval Augmented Generation (RAG). By addressing the challenges associated with both structured and unstructured data, AWS is positioning itself as a pivotal player in enterprise AI implementations, with the stated goal of helping businesses get more value from their data.

Retrieval Augmented Generation (RAG) acts as a bridge between data retrieval and generative processes, allowing for a more nuanced and context-rich interaction with AI systems. While this technique has been effective in leveraging text data, recent challenges have surfaced concerning its applicability to structured data stored in data lakes and warehouses. AWS’s VP of AI and Data, Swami Sivasubramanian, acknowledges that the intricacies of transitioning from unrefined data to actionable insights present substantial hurdles. The underlying problem is not just about retrieving individual data points but rather translating complex natural language queries into sophisticated SQL commands capable of filtering, aggregating, and joining various data tables.
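To make the text-to-SQL problem concrete, here is a minimal sketch of the kind of query a natural language question has to become. The tables, data, and the `revenue_by_region` helper are hypothetical and invented for illustration, and the SQL is hand-written here rather than produced by any model or AWS service.

```python
import sqlite3

# Hypothetical schema and data, invented purely for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER,
                         amount REAL, year INTEGER);
    INSERT INTO customers VALUES (1, 'EMEA'), (2, 'APAC'), (3, 'EMEA');
    INSERT INTO orders VALUES
        (1, 1, 100.0, 2024), (2, 2, 250.0, 2024),
        (3, 3, 50.0, 2024), (4, 1, 75.0, 2023);
""")

# Question: "What was total order revenue per region in 2024?"
# A text-to-SQL step would have to produce something like this query,
# which joins two tables, filters by year, and aggregates by region.
GENERATED_SQL = """
    SELECT c.region, SUM(o.amount) AS revenue
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    WHERE o.year = ?
    GROUP BY c.region
    ORDER BY c.region
"""

def revenue_by_region(connection, year):
    """Run the query and return (region, revenue) rows."""
    return connection.execute(GENERATED_SQL, (year,)).fetchall()

rows = revenue_by_region(conn, 2024)
```

Even this small question needs a join, a filter, and a grouped aggregate, which is why retrieving individual data points is not enough.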

The readiness of structured data for RAG applications continues to be a critical concern for enterprises looking to harness AI’s capabilities effectively. Sivasubramanian explains that a deep understanding of data schemas and historical query logs is essential. Enterprises face the dual challenge of maintaining data accuracy and security while keeping pace with ever-evolving data structures. The introduction of AWS’s Amazon Bedrock Knowledge Bases service aims to tackle these complexities head-on by providing a fully managed RAG framework that automates the data integration process. The service removes the need for custom code, allowing organizations to focus on generating relevant responses from their data.

With the new capabilities of Amazon Bedrock Knowledge Bases, users can query structured data directly, and the results can be used to enrich the outputs of generative AI applications. This automated workflow adjusts dynamically to changing data schemas and incorporates learned query patterns to increase accuracy. By making structured data easier to reach, AWS aims to help enterprises build generative AI systems that respond with current, organization-specific information.
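One way to see why schema awareness matters is to read the schema at query time instead of hard-coding it. The sketch below does this against an in-memory SQLite database; `describe_schema` is a hypothetical helper, and this is an assumption about the general idea, not a description of how Amazon Bedrock Knowledge Bases works internally.

```python
import sqlite3

def describe_schema(connection):
    """Return a text description of every table and its columns."""
    tables = connection.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    lines = []
    for (table,) in tables:
        # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk).
        columns = connection.execute(f"PRAGMA table_info({table})").fetchall()
        column_text = ", ".join(f"{col[1]} {col[2]}" for col in columns)
        lines.append(f"{table}({column_text})")
    return "\n".join(lines)

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
before = describe_schema(conn)

# The schema changes; the next description picks up the new column
# without any code change.
conn.execute("ALTER TABLE orders ADD COLUMN currency TEXT")
after = describe_schema(conn)
```

A description like this could be passed to a query generator as context, so that newly added columns become available to it as soon as they exist.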

Another significant addition to AWS’s toolkit is GraphRAG, a capability aimed at improving the accuracy of AI systems by making the connections between disparate data sources explicit. Knowledge graphs play a pivotal role here, representing relationships among various datasets. Sivasubramanian highlights that these graphs, when translated into embeddings within generative AI applications, allow for a cohesive understanding of customer data. AWS streamlines this process by integrating the Amazon Neptune graph database service, which automatically creates representations of data relationships. As a result, enterprises can build comprehensive AI applications without requiring extensive graph expertise.
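The idea of following relationships to gather context can be sketched with a small in-memory graph. The triples, identifiers, and the `related_facts` function below are hypothetical; this is a plain breadth-first traversal for illustration and does not use or represent the Amazon Neptune API.

```python
from collections import deque

# Hypothetical knowledge graph as (subject, relation, object) triples.
TRIPLES = [
    ("customer:42", "placed", "order:7"),
    ("order:7", "contains", "product:widget"),
    ("customer:42", "opened", "ticket:19"),
    ("ticket:19", "about", "product:widget"),
    ("customer:99", "placed", "order:8"),
]

def related_facts(triples, start, max_hops=2):
    """Collect triples reachable from `start` within `max_hops` edges.

    Edges are followed in the subject -> object direction only.
    """
    neighbors = {}
    for subj, rel, obj in triples:
        neighbors.setdefault(subj, []).append((rel, obj))
    seen = {start}
    queue = deque([(start, 0)])
    facts = []
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # Do not expand nodes at the hop limit.
        for rel, obj in neighbors.get(node, []):
            facts.append((node, rel, obj))
            if obj not in seen:
                seen.add(obj)
                queue.append((obj, depth + 1))
    return facts

facts = related_facts(TRIPLES, "customer:42")
```

The facts collected this way link a customer's order and support ticket to the same product, a connection that retrieving each record in isolation would not surface.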

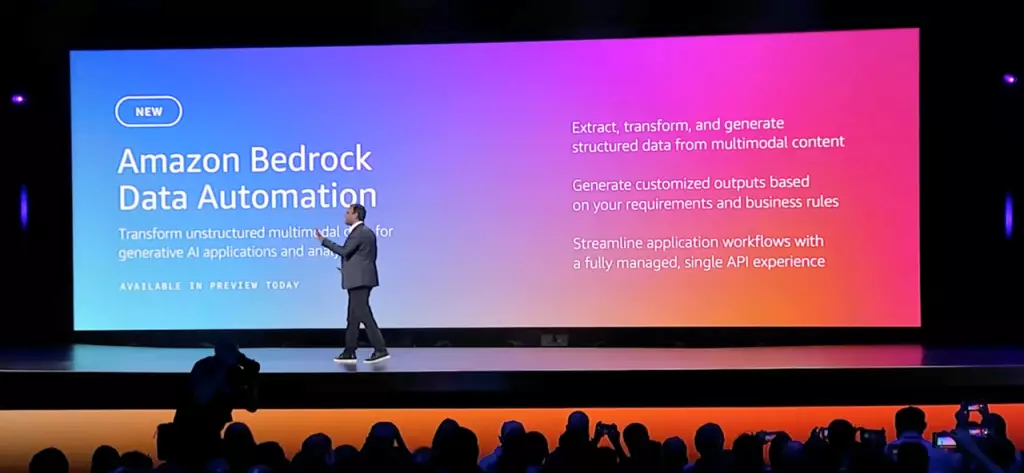

The obstacles posed by unstructured data are formidable, encompassing everything from PDFs to multimedia content. Many organizations struggle to make this information actionable within RAG frameworks. Sivasubramanian stresses the necessity of transforming and processing unstructured data to enhance its utility. In response to this challenge, AWS has introduced Amazon Bedrock Data Automation, a feature intended to change how unstructured data is put to use. It supports an ETL (Extract, Transform, Load) process that converts diverse content types into structured formats suitable for generative applications. Through a single API, enterprises can tailor outputs to align with existing data schemas, making it easier to extract meaningful insights.
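As a rough illustration of mapping unstructured content onto an existing schema, the sketch below extracts fields from free text with regular expressions. The schema, sample text, and `extract_record` function are hypothetical, and this is not the Amazon Bedrock Data Automation API; a managed service would handle far more varied inputs such as PDFs and multimedia.

```python
import re

# Hypothetical target schema: field name -> (regex, converter).
INVOICE_SCHEMA = {
    "invoice_id": (r"Invoice\s+#(\w+)", str),
    "customer": (r"Customer:\s*(.+)", str),
    "total": (r"Total:\s*\$([\d.]+)", float),
}

def extract_record(text, schema):
    """Map free text onto the schema; fields not found become None."""
    record = {}
    for field, (pattern, convert) in schema.items():
        match = re.search(pattern, text)
        record[field] = convert(match.group(1).strip()) if match else None
    return record

raw_text = """Invoice #A1027
Customer: Example Corp
Total: $1250.50
"""
record = extract_record(raw_text, INVOICE_SCHEMA)
```

Keeping the schema as data, separate from the extraction logic, mirrors the idea of tailoring outputs to a schema the organization already uses.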

With these enhancements, AWS is not only addressing the technical challenges of integrating diverse data types into RAG but also fostering an environment where enterprises can more effectively utilize their informational assets. Sivasubramanian’s assertions reflect a larger narrative within the tech industry’s continuous drive toward refining data utilization methods, thereby empowering organizations to develop AI capabilities that are contextually relevant and performance-driven.

The suite of tools announced at AWS re:Invent 2024 marks a notable step toward making enterprise data more accessible and manageable for generative AI. As companies increasingly rely on data-driven strategies for decision-making, AWS’s focus on streamlining data integration is likely to prove valuable.