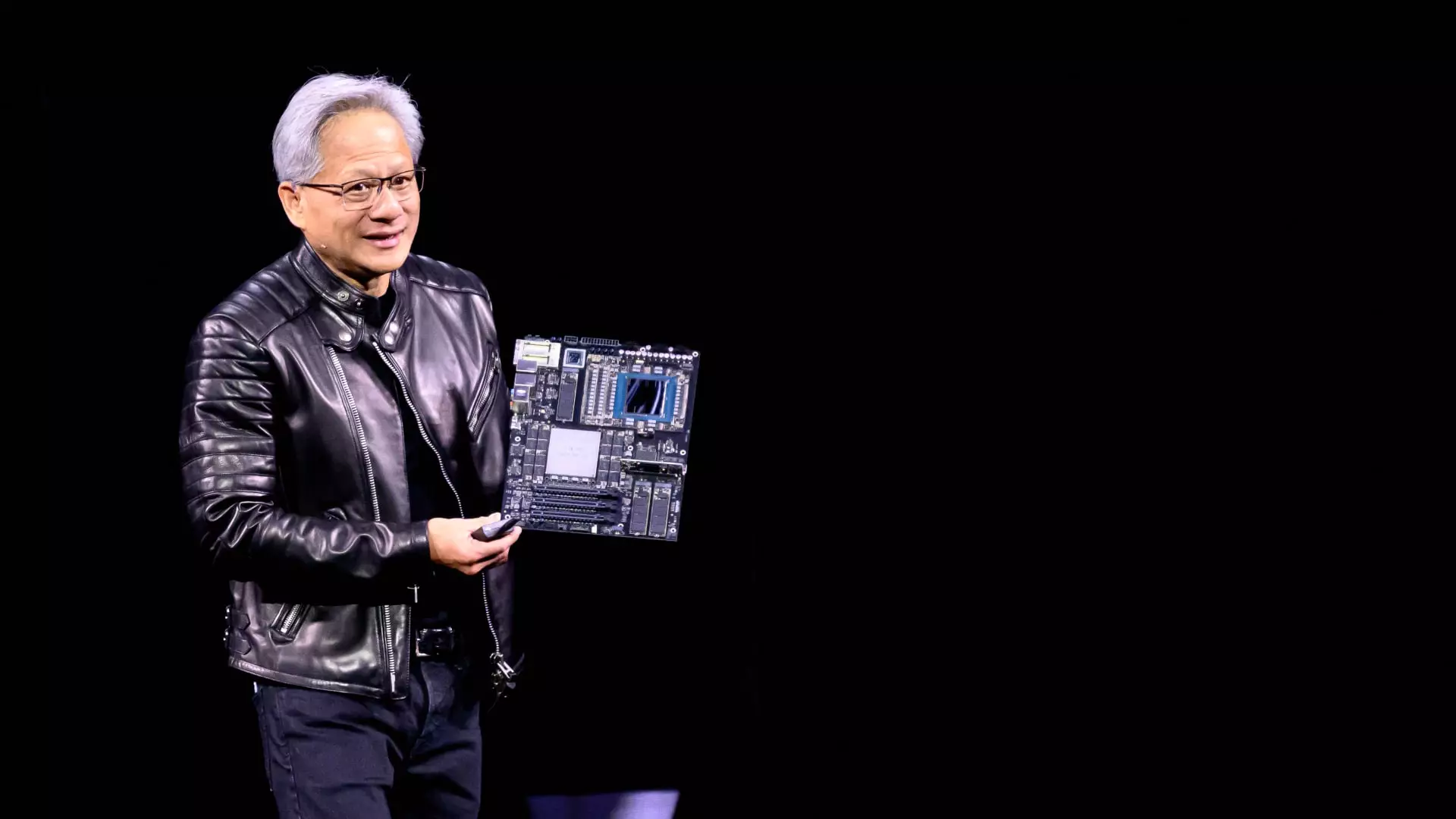

At the heart of Nvidia’s astonishing growth lies an unwavering commitment to speed, a factor that CEO Jensen Huang emphasized during his recent keynote at the GTC conference. As he laid out the case for faster chips, it became clear that the future of artificial intelligence (AI) hinges on the capacity to deliver high-performance processing power at scale. AI applications are demanding enough that responsiveness and efficiency are not luxuries; they are necessities. Huang’s argument is straightforward: the faster the chips, the greater the impact on cost and efficiency in cloud computing.

Huang’s rationale is compelling: framing speed as a route to cost reduction resonates particularly well as organizations prepare for massive investments in AI infrastructure. Recent projections indicate that several hundred billion dollars will soon flow into building data centers, all with an eye on leveraging Nvidia’s cutting-edge technology. Companies are not merely dreaming of enhanced capabilities; they are already laying the groundwork to realize them.

The Financial Mathematics Behind Advanced Chips

During his keynote, Huang dug into the financial rationale for adopting Nvidia’s fastest chips, explaining how the economics of performance can deliver substantial returns on investment. He even walked the audience through some back-of-the-envelope math, which, although informal, demystifies the complexity often associated with these discussions. The cost-per-token metric is central here: it expresses how much it costs to produce a unit of AI output, and it offers critical insight for hyperscale cloud operators weighing their investment choices.
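To make the cost-per-token idea concrete, here is a minimal sketch of the kind of envelope math involved. The function name and every figure in it are hypothetical, chosen only for illustration; they are not numbers from Huang’s keynote or from Nvidia. The sketch assumes a system has a known hourly operating cost and a known token throughput.

```python
def cost_per_million_tokens(hourly_cost_usd: float, tokens_per_second: float) -> float:
    """Return the cost in USD of generating one million tokens.

    Both inputs are illustrative assumptions: the all-in hourly cost of
    running a system, and the number of tokens it produces per second.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return hourly_cost_usd / tokens_per_hour * 1_000_000


# Hypothetical comparison: the faster system costs twice as much per hour
# but produces five times as many tokens per second.
slower = cost_per_million_tokens(hourly_cost_usd=100.0, tokens_per_second=10_000)
faster = cost_per_million_tokens(hourly_cost_usd=200.0, tokens_per_second=50_000)

print(f"slower system: ${slower:.2f} per million tokens")
print(f"faster system: ${faster:.2f} per million tokens")
```

Under these made-up inputs, the pricier system still comes out cheaper per token, because its throughput grows faster than its hourly cost. That is the shape of the argument, not a claim about any real product’s pricing.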

The projections for Nvidia’s Blackwell Ultra systems suggest a staggering potential revenue surge: 50 times the revenue that its predecessor, the Hopper system, could generate. The implication of Huang’s assertion is that speed will not just offset costs but redefine the operational capacity of cloud services. In an era where data processing speed is synonymous with competitive advantage, this projection should leave industry players eager to align themselves with Nvidia’s trajectory.

The Cloud Providers’ Dilemma: Cost vs. Customization

Nvidia’s dominance raises questions about the viability of custom solutions developed by major cloud giants like Microsoft, Google, Amazon, and Oracle. Huang was quick to dismiss the premise that proprietary chips could pose a real threat, citing concerns about flexibility and adaptability in rapidly evolving AI algorithms. In his view, the potential pitfalls of custom-designed hardware that lacks the versatility to keep pace with disruptive technology developments highlight an inherent risk that many cloud providers might overlook.

Moreover, his skepticism about the success of upcoming application-specific integrated circuits (ASICs) adds another layer of intrigue. The underwhelming history of ASIC projects serves as a cautionary tale, and Huang’s confidence in Nvidia’s capacity to deliver superior performance casts a shadow over any competitive aspirations that these emerging technologies might harbor.

The Vision for Tomorrow’s AI Infrastructure

Nvidia evidently not only understands the present landscape of AI chip technology but also has a clear vision for the future. The announced roadmap for upcoming chips such as Rubin Next and Feynman signals that the company is not merely reacting but actively setting the pace for the industry. As companies plan multi-year rollouts, knowing what lies ahead in the Nvidia pipeline becomes a vital element in their decision-making.

Huang’s message is clear: the question isn’t whether to invest in Nvidia technology, but how heavily to invest in a future marked by transformative AI capabilities. As organizations secure budgets, power access, and land for infrastructure, they need a partner equipped to deliver the necessary speed and performance.

Overall, Huang’s remarks at the GTC conference paint a compelling picture, not just of Nvidia’s immediate offerings but of a broader paradigm shift in AI computing. The technological landscape is being reshaped by demands for speed, efficiency, and robust performance. As corporations grapple with these shifting dynamics, it is hard to overlook the strategic advantage that comes from investing in Nvidia’s next-generation chips.