The rise of large language models (LLMs) in artificial intelligence has been remarkable. These systems, with hundreds of billions of parameters, rely on intricate data connections refined through extensive training. Their size lets them discern complex patterns and produce highly accurate outputs, but that power comes at a steep price. Google reportedly spent $191 million training its Gemini 1.0 Ultra model, a figure that illustrates the cost of building models at this scale. Beyond the financial cost, LLMs are notorious for their energy consumption: a single query to a service such as ChatGPT can use about ten times the energy of a single Google search, raising significant sustainability concerns.

The industry is at a crossroads. After years of building ever-larger models, there is a palpable shift toward a new frontier: small language models (SLMs). With substantial investments in LLMs yielding diminishing returns in some contexts, researchers and developers are now exploring how smaller, specialized models can provide effective solutions without the intensive resource consumption of their larger siblings.

The Rise of Small Language Models

Small language models, typically containing just a few billion parameters, are emerging as practical alternatives to LLMs. While these models lack the broad capabilities of their larger counterparts, they excel in narrow applications such as answering specific queries in healthcare chatbots or summarizing conversation threads. Zico Kolter, a computer scientist at Carnegie Mellon University, says that for many straightforward tasks an 8-billion-parameter model is sufficient and can even excel.

One of the most compelling aspects of SLMs is their operational flexibility. They can function seamlessly on devices as modest as laptops or smartphones, making cutting-edge AI technology accessible beyond the confines of massive data centers. This democratization of AI demonstrates a fundamental shift toward more efficient and user-friendly applications.
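A rough back-of-the-envelope estimate helps show why a model with a few billion parameters can fit on a laptop. The sketch below computes only the memory needed to hold the weights. The function name `weight_memory_gb` and the storage precisions (16, 8 and 4 bits per parameter) are illustrative assumptions, not figures from the researchers quoted here, and the estimate ignores activations and other runtime overhead.

```python
def weight_memory_gb(num_parameters, bits_per_parameter):
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    return num_parameters * bits_per_parameter / 8 / 1e9


# Assumed storage precisions; real deployments vary.
for bits in (16, 8, 4):
    size = weight_memory_gb(8e9, bits)
    print(f"8 billion parameters at {bits}-bit precision: about {size:.0f} GB")
```

Under these assumptions, the same 8-billion-parameter model needs a quarter of the memory at 4 bits per parameter that it needs at 16, which is the kind of gap that separates a data-center accelerator from consumer hardware.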

Innovative Techniques Enhancing Efficiency

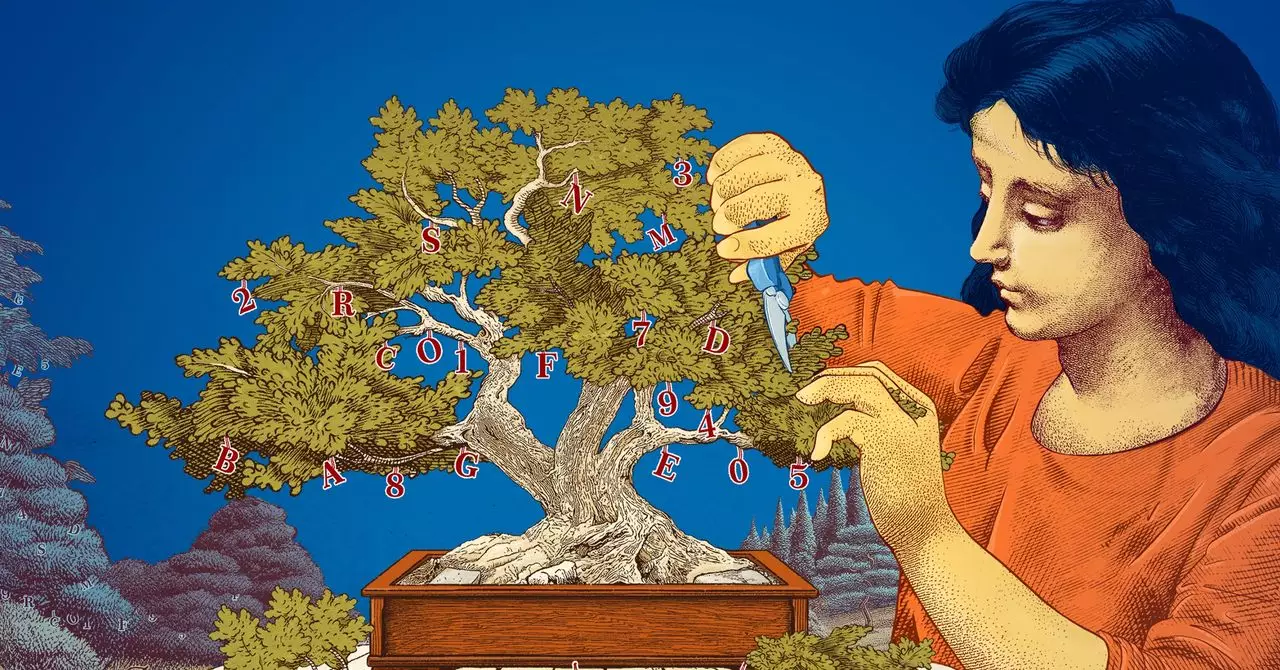

Optimizing SLMs involves several techniques. Chief among these is knowledge distillation. The approach resembles a teacher-student relationship: a larger model, trained on vast and often messy datasets, passes what it has learned to a smaller model in the form of distilled, high-quality information. Kolter points out that this lets SLMs achieve strong results with limited data, in effect working smarter rather than harder.
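Knowledge distillation comes in several variants, and the description above does not say which one is meant. As a minimal sketch of one common formulation, soft-label distillation, the code below measures how far a student's predicted distribution is from a teacher's, after both are softened with a temperature. The logits, the temperature value and the function names are hypothetical and chosen only for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature softens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical scores for three candidate tokens.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]  # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]    # disagrees with the teacher

print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

In a training loop, a loss of this kind would be minimized so that the student's outputs move toward the teacher's. The student that already agrees with the teacher gets the smaller loss.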

Another method is pruning. Inspired by the efficiency of the human brain, where synaptic pruning removes unneeded connections, this technique lets researchers eliminate unnecessary connections from a neural network. In a 1989 paper, Yann LeCun argued that up to 90% of a trained neural network’s parameters could be removed without a notable drop in performance. This “optimal brain damage” approach has significant implications for improving model efficiency and for tailoring SLMs to specific tasks or environments.
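To make the idea of pruning concrete, here is a minimal sketch of magnitude pruning, which zeroes out the weights with the smallest absolute values. This is a simpler stand-in, not the method from LeCun's paper, which ranks parameters by a saliency estimate rather than by raw magnitude. The weight values and the function name `magnitude_prune` are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)  # copy so the original weights are left untouched
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


# Hypothetical weights from a single layer.
weights = [0.02, -1.3, 0.4, -0.01, 0.9, 0.05, -0.3, 0.001, 2.1, -0.07]
pruned = magnitude_prune(weights, sparsity=0.9)
print(pruned)
```

Whether a network survives 90% sparsity depends on the model and the task. In practice, pruning is usually followed by further training so the remaining weights can compensate.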

Empowerment through Transparency and Experimentation

In an era where transparency in AI systems is paramount, small language models offer distinct advantages. Because they have far fewer parameters than LLMs, the reasoning behind their outputs can be easier to trace and understand. That clarity gives a better view of the underlying algorithms, which matters to researchers who want to explore new ideas and methods without the high stakes of launching large-scale models.

Leshem Choshen of the MIT-IBM Watson AI Lab emphasizes the value of small models for experimentation, noting that researchers can probe and test new ideas in a low-stakes setting. Rather than embarking on costly, high-stakes ventures with LLMs, the research community can gain critical insights through the simpler, more manageable framework that SLMs provide.

The Future is Small and Purposeful

Despite the tremendous capabilities of large models, it is increasingly evident that small, targeted models hold their own in many contexts. For tasks that require precision and efficiency without unnecessary complexity, SLMs can deliver strong performance while conserving both financial and ecological resources. They embody a trend in the AI landscape that prioritizes purpose over sheer size. As researchers and tech companies embrace this evolution, the shift toward smaller models marks an exciting chapter in artificial intelligence, one where efficiency and sustainability sit at the core of innovation. The future may indeed belong to small, purposeful models that meet the distinct and varied needs of users across the globe.