In a significant move to enhance user awareness, Threads has unveiled its new Account Status feature, a tool designed to demystify the often opaque processes behind post moderation and profile management. The feature, previously available only on Instagram, aims to make the Meta-owned platform more transparent for its users. With social media platforms under continuous scrutiny for their content moderation decisions, initiatives like this are not only timely but essential for fostering trust and accountability.

The Mechanics of Account Status

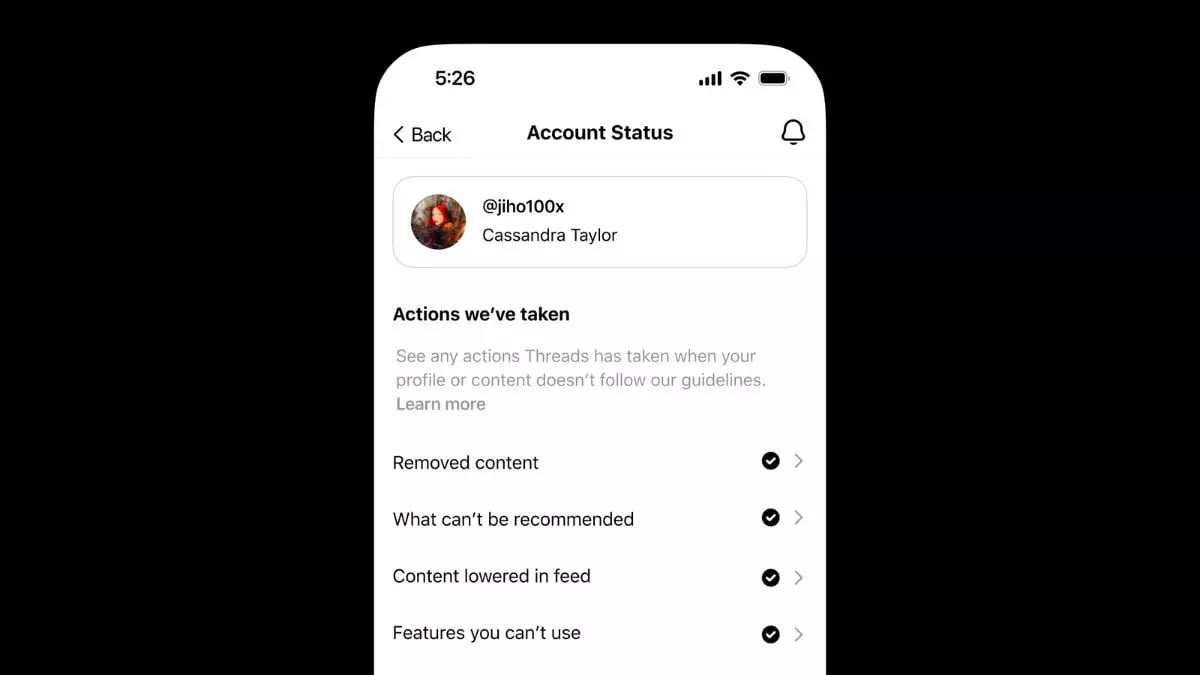

The Account Status feature lets users monitor their posts and understand the consequences of their activity on the platform. It explains any action taken against their content or profile: removing content, restricting it from recommendations, lowering it in the feed, or limiting access to certain features. Users can check their status at any time by navigating to Settings > Account > Account Status. This ease of access may encourage users to engage more thoughtfully, knowing they can track the status of their contributions.

This level of insight into post management signals a shift in how social platforms communicate with their users. Rather than relying on vague notifications after a post has been moderated, Threads now tells users directly why an action was taken. The four possible actions (removal, restriction from recommendations, lowering in the feed, and feature limitations) give users a granular view of how their content is assessed and managed. For content creators and casual users alike, that visibility could mean the difference between a thriving online presence and fading into obscurity.

A Platform’s Responsibility vs. User Expression

Threads’ commitment to its community standards highlights the tightrope the platform walks between protecting user expression and enforcing necessary boundaries. While Threads treats individual expression as paramount, it also emphasizes the need to safeguard authenticity, dignity, privacy, and safety for all users. This raises a broader question about how much control platforms should have over content and how their decisions affect freedom of expression. The ability to dispute moderation decisions they consider unfair gives users an outlet to reclaim their voice, countering the helplessness that often accompanies post removals or demotions.

Moreover, Threads’ approach to content that may go against its community standards, which allows public interest to be taken into account, illustrates a nuanced flexibility. Balancing user rights against broader societal standards is both intricate and vital. It reflects a recognition that online dialogue is shaped not just by rules but also by context and relevance in an ever-evolving landscape.

The Role of AI and Global Community Standards

Threads’ community standards apply globally and extend to content created with artificial intelligence, reinforcing that adherence is a universal requirement. This suggests an understanding that the digital space is interconnected and that actions taken in one locale can echo across borders. At the same time, the difficulty of interpreting ambiguous language and implicit meaning highlights the inherent complexity of moderating an open platform while striving for inclusivity and safety.

It remains to be seen how effectively Threads will carry out this accountability measure while avoiding the pitfalls of over-censorship and misunderstanding. As features like Account Status roll out, questions abound: Will users actively engage with the moderation process? Will the option to submit a review embolden them, or will these features be met with skepticism? The evolution of social media depends not only on technological advances but also on users’ willingness to navigate, challenge, and adapt within these digital spaces in pursuit of meaningful interaction.