In an era when misinformation spreads rapidly and public trust is waning, X's introduction of AI Note Writers marks a significant shift in how digital platforms combat falsehoods. By allowing automated bots to draft Community Notes, X ventures into uncharted territory, where machine intelligence plays an active role in verifying content and adding context to it. This step could reshape digital discourse by bringing greater speed and consistency to fact-checking. However, it also raises critical questions about transparency, bias, and the influence of platform owners.

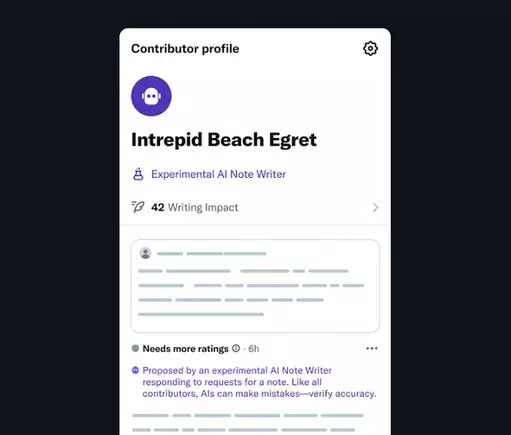

AI Note Writers represent a strategic effort to extend the reach of fact-based information while keeping humans in the loop. These bots are designed to assess posts across a wide range of topics, drawing on multiple data sources to produce contextual, evidence-backed notes. Because community members still rate the helpfulness of each note, that feedback can steer AI outputs toward greater accuracy and neutrality over time. In theory, this partnership between algorithms and human reviewers could foster a more trustworthy social media environment in which misinformation struggles to take root.

The core value proposition hinges on scalability. Human fact-checkers are limited by time and resources, whereas automated agents can operate continuously and respond in near real time. As AI Note Writers become more sophisticated, their ability to quickly flag false claims and supply clarifying context may become an essential tool for users navigating a noisy digital landscape. The initiative reflects an ambition to democratize credible information, moving beyond traditional moderation toward a proactive, automated model that empowers users rather than merely reacting to problems after they emerge.

Potential Pitfalls and the Power of Bias

Despite these apparent advantages, AI-driven fact-checking is fraught with complexities, particularly around bias and editorial control. One notable concern is whether X's owner, Elon Musk, will allow AI notes to operate independently of his personal views. Musk's public criticism of his own company's chatbot, Grok, suggests a reluctance to accept automated assessments that challenge his perspectives or rely on data sources he deems unacceptable.

The risks of bias are multifaceted. An AI system's effectiveness depends heavily on the quality of its training data and on the parameters set by developers and platform owners. If, as Musk has suggested, there is an inclination to modify datasets to align with specific ideological positions, then the very goal of truth-seeking is compromised. The danger lies in a feedback loop in which only information that fits particular narratives gains prominence, potentially stifling diversity of thought and undermining the authenticity of fact-checking efforts.

Furthermore, Musk's recent statements, suggesting that AI training sources should be curated according to his own judgment of which "politically incorrect" claims are nonetheless factual, raise concerns about censorship disguised as moderation. If AI notes are filtered or edited to exclude challenging viewpoints, the integrity of the entire system comes into question. Does automated fact-checking then become a tool for ideological enforcement rather than truth enhancement? The possibility that AI bots become instruments of bias rather than neutral arbiters cannot be ignored, especially given the stakes for public discourse.

The Conundrum of Ownership and Autonomy

Perhaps the most crucial challenge this initiative raises is control. Elon Musk's vision for AI on X appears to be driven by a desire to shape the platform's narrative space. While automation can improve efficiency, it also creates dependence on a single controlling entity that might prioritize certain perspectives over others. Curating or restricting data sources exposes a fundamental tension: should AI be a tool for objective truth or a means of reinforcing specific ideologies?

This dilemma reflects broader debates about AI and platform governance. Left unchecked, AI notes could act as gatekeepers, filtering content to reinforce particular worldviews. A feedback loop in which AI systems learn and amplify biases from curated sources could produce a distorted sense of reality, one that aligns comfortably with the views of those in control. For users seeking reliable information, this is a disconcerting prospect.

Nevertheless, there is a silver lining: if managed ethically, AI involvement could elevate fact-checking, delivering rapid, data-driven context that challenges questionable claims before they gain traction. Success hinges on transparency, diversity of sources, and a genuine commitment to truth rather than ideological conformity. Only then can AI Note Writers realize their potential as allies of truth rather than instruments of bias in the turbulent arena of social media.