Have you ever stood in the produce aisle studying the apples, wondering which ones are truly the best? This everyday dilemma points to a broader challenge in food quality assessment, one that current machine-learning models struggle to address. Although these models can be highly efficient, they often lack the nuance of human perception, which adapts to environmental cues such as lighting. A recent study published in the Journal of Food Engineering offers insights that could pave the way for new applications, such as a mobile app for consumers and improved techniques for retailers.

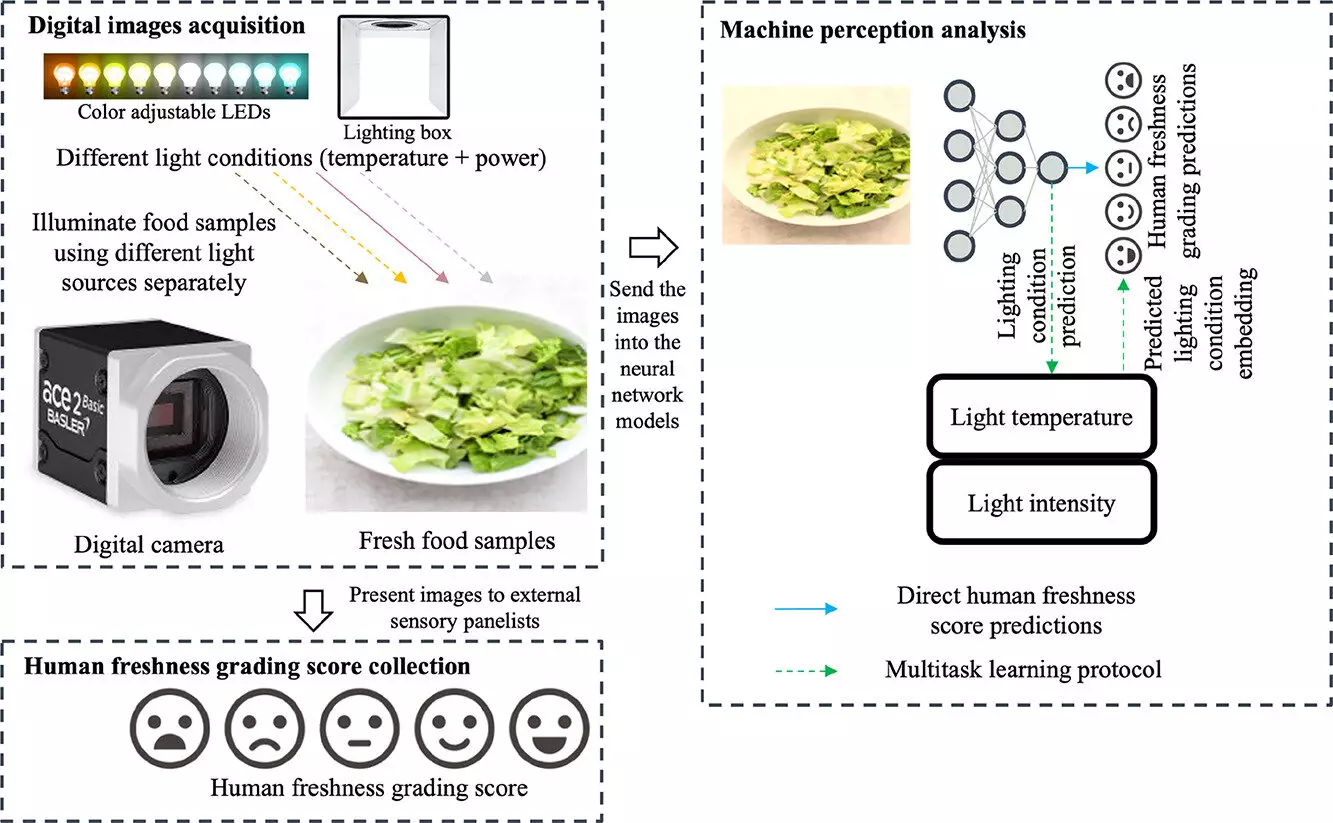

Led by Dongyi Wang, an expert in smart agriculture and food manufacturing, the research was conducted at the Arkansas Agricultural Experiment Station. Wang's team examined how human perceptions of food quality relate to machine-learning predictions. Their work suggests that it is possible to build algorithms that not only mimic human evaluations but also predict more reliably across a range of lighting conditions. Traditional machine-learning models have often ignored variability in human perception, relying primarily on basic color recognition or simplistic human labeling.

The researchers used Romaine lettuce as their test case. They conducted sensory evaluations with 109 participants, who systematically rated images of lettuce over several sessions. Participants scored each image for freshness on a scale from 0 to 100, producing a rich dataset for analysis.

One notable finding was that incorporating human perception data substantially improved machine-learning accuracy. When the models accounted for variation in human assessments, their prediction errors fell by approximately 20%. By training models on this enriched dataset, the researchers aimed to develop algorithms that are both more consistent and better attuned to the complexities of visual assessment.
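The article does not say how the human ratings were fed into the models, so the Python sketch below shows only one simple, assumed way to turn many 0 to 100 freshness scores into per-image training labels and to express an error reduction like the roughly 20% reported. The image IDs, scores, predictions, and the helper names `aggregate_labels`, `mean_absolute_error`, and `relative_error_reduction` are all hypothetical; this is an illustration, not the study's method.

```python
from statistics import mean, pstdev

# Hypothetical data: each image ID maps to the 0-100 freshness scores given
# by different participants. These numbers are invented for illustration only.
ratings = {
    "lettuce_001_warm": [82, 75, 90, 68],
    "lettuce_001_cool": [60, 55, 70, 58],
}


def aggregate_labels(ratings):
    """Collapse per-participant scores into (mean, population std dev) per image.

    The mean serves as a hypothetical training label; the spread records how
    much raters disagreed about that image.
    """
    labels = {}
    for image_id, scores in ratings.items():
        if not scores:
            raise ValueError(f"no scores for {image_id}")
        if any(not 0 <= s <= 100 for s in scores):
            raise ValueError(f"score outside 0-100 for {image_id}")
        labels[image_id] = (mean(scores), pstdev(scores))
    return labels


def mean_absolute_error(predictions, labels):
    """Average absolute gap between model predictions and mean human labels."""
    return mean(abs(predictions[k] - labels[k][0]) for k in labels)


def relative_error_reduction(baseline_error, new_error):
    """Fraction by which new_error improves on baseline_error (0.2 means 20%)."""
    if baseline_error <= 0:
        raise ValueError("baseline_error must be positive")
    return (baseline_error - new_error) / baseline_error


if __name__ == "__main__":
    labels = aggregate_labels(ratings)
    # Hypothetical model outputs for the same two images.
    predictions = {"lettuce_001_warm": 80.0, "lettuce_001_cool": 58.75}
    print(labels)
    print(mean_absolute_error(predictions, labels))
    print(relative_error_reduction(10.0, 8.0))
```

In this toy setup, a model whose mean absolute error drops from a hypothetical 10.0 points to 8.0 points shows a relative reduction of 0.2, or 20%. Keeping each image's spread alongside its mean is one way to preserve how much human raters disagreed, which a single averaged label would otherwise hide.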

The researchers gathered data over eight days, capturing 675 images of Romaine lettuce at varying levels of browning under different lighting conditions. The goal was a comprehensive dataset reflecting how environmental factors alter human perception. They found that certain lighting, particularly warmer tones, could mask visible defects such as browning and thereby skew assessments. This influence of illumination on perception underscores the interplay between human sensory experience and machine-learning capabilities.

The implications of Wang's study extend well beyond lettuce. The method for incorporating human perception into machine-learning models could benefit many fields, from food evaluation to aesthetic judgments of products such as jewelry or apparel. Wang suggests the approach is versatile, opening opportunities for machine learning in industries centered on consumer products and quality control.

The research also gives grocery stores and manufacturers practical insight into how to present products. By understanding how lighting and display affect consumers' perception of freshness, businesses can adjust their marketing strategies. For instance, well-chosen lighting can enhance the visual appeal of produce and potentially increase sales.

The research at the Arkansas Agricultural Experiment Station offers a promising way to bridge the gap between human sensory perception and machine learning. This dual approach could refine food quality assessment techniques and reshape the grocery shopping experience. As applications for the technology develop, we may soon have an app that helps us select the freshest produce with confidence. By advancing machine-learning models that better capture human perception, researchers are moving toward a future in which technology and sensory evaluation work together, deepening our understanding of food quality in a visually driven consumer environment.