Large language models (LLMs) are built to be general-purpose, so a central challenge is making them more accurate on specialized questions without sacrificing efficiency. Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have proposed an approach to LLM collaboration called Co-LLM. Modeled on human teamwork, the algorithm pairs a general-purpose language model with specialized models and lets the general model hand off individual parts of an answer to an expert. This article examines how Co-LLM works, what it improves, and what it could mean for the future of AI-generated responses.

Understanding the Need for Collaboration

Most people have known only part of the answer to a complex question and turned to someone with expertise to fill in the rest. LLMs face a similar problem: they can struggle to produce accurate responses in highly specialized fields such as medicine or mathematics. Traditional methods for improving LLM accuracy often rely on extensive labeled datasets and intricate algorithms. The CSAIL team sought a more intuitive solution, one in which models learn to collaborate on their own, without labeled data or heavy added complexity.

Co-LLM addresses this need by pairing a general-purpose LLM with specialized counterparts. What distinguishes the framework is its ability to recognize where the general-purpose model lacks the necessary knowledge and to defer to the specialized model at those points, much as people know instinctively when to consult an expert. The result is responses that are both more accurate and more efficient to produce.
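One way to make "knowing when to defer" precise, offered here as a sketch rather than as Co-LLM’s exact formulation, is to treat the choice of model at each token $t$ as a hidden binary variable $Z_t$: $Z_t = 0$ means the general-purpose model writes the token and $Z_t = 1$ means the specialized model does. A learned gate $P_\theta(Z_t \mid x_{<t})$ then mixes the two models’ next-token distributions $P_0$ and $P_1$:

$$
P(x_t \mid x_{<t}) = \sum_{z \in \{0,1\}} P_\theta(Z_t = z \mid x_{<t})\, P_z(x_t \mid x_{<t})
$$

Maximizing $\sum_t \log P(x_t \mid x_{<t})$ on ordinary text needs no labels saying which model should write which token. Under this formulation, the gradient with respect to the gate’s logit at step $t$ equals the posterior minus the gate’s current value, where the posterior is

$$
P(Z_t = 1 \mid x_{\le t}) = \frac{P_\theta(Z_t = 1 \mid x_{<t})\, P_1(x_t \mid x_{<t})}{P(x_t \mid x_{<t})}
$$

This posterior grows as the expert assigns the observed token more probability than the base model does, so training pushes the gate toward deferring at exactly those positions.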

The Mechanics of Co-LLM

At the core of Co-LLM is a learned “switch variable,” a machine-learning component that, for each token in the response, estimates whether the general-purpose LLM is competent to produce it or should defer to the specialized model. As the general-purpose model builds an answer, the switch decides word by word where the expert’s output should be used instead. For instance, if asked for examples of extinct bear species, the general-purpose LLM begins composing the reply, and when the switch detects that an expert-level detail such as the year of extinction is needed, that token comes from the specialized model.
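The token-level handoff can be sketched in a few lines of Python. Everything here is hypothetical and for illustration only: `co_decode`, the scripted toy models, the fixed 0.5 threshold, greedy token selection, and the `<YEAR>` placeholder standing in for the expert’s fact are assumptions, not Co-LLM’s actual implementation, whose switch is learned rather than hand-written.

```python
from typing import Callable, Dict, List, Tuple

# A next-token distribution: token -> probability.
Distribution = Dict[str, float]
# A "model" maps the context so far to a next-token distribution.
Model = Callable[[List[str]], Distribution]


def co_decode(
    base: Model,
    expert: Model,
    switch: Callable[[List[str]], float],
    prompt: List[str],
    max_tokens: int = 20,
    threshold: float = 0.5,
    eos: str = "<eos>",
) -> Tuple[List[str], List[bool]]:
    """Greedy decoding where a switch picks which model writes each token.

    `switch(context)` returns the probability of deferring to the expert;
    above `threshold`, the expert writes the next token. Returns the
    generated tokens and, per token, whether the expert wrote it.
    """
    context = list(prompt)
    tokens: List[str] = []
    deferred: List[bool] = []
    for _ in range(max_tokens):
        use_expert = switch(context) > threshold
        # Only the chosen model is queried for this position.
        dist = expert(context) if use_expert else base(context)
        token = max(dist, key=dist.get)  # greedy choice
        if token == eos:
            break
        context.append(token)
        tokens.append(token)
        deferred.append(use_expert)
    return tokens, deferred


# --- Toy, scripted stand-ins for the extinct-bear example (hypothetical) ---
PROMPT = ["Name", "an", "extinct", "bear", ":"]
BASE_SCRIPT = ["The", "cave", "bear", ",", "extinct", "since", "?", "<eos>"]
expert_calls: List[int] = []


def base_model(context: List[str]) -> Distribution:
    i = len(context) - len(PROMPT)
    token = BASE_SCRIPT[i] if i < len(BASE_SCRIPT) else "<eos>"
    if token == "?":
        # The base model is unsure here and can only guess.
        return {"1900": 0.4, "1950": 0.3, "2000": 0.3}
    return {token: 1.0}


def expert_model(context: List[str]) -> Distribution:
    expert_calls.append(len(context))  # record when the expert is consulted
    # "<YEAR>" is a placeholder for the expert's factual answer.
    return {"<YEAR>": 0.95, "1900": 0.05}


def switch(context: List[str]) -> float:
    # Hand-written stand-in for a learned switch: defer right after "since".
    return 0.9 if context[-1] == "since" else 0.1


tokens, deferred = co_decode(base_model, expert_model, switch, PROMPT)
print(" ".join(tokens))  # The cave bear , extinct since <YEAR>
print(deferred)          # [False, False, False, False, False, False, True]
```

Because only the model chosen by the switch is queried at each step, the expert is consulted exactly once in this run, for the single token where the base model would otherwise have guessed.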

Because the specialized model is engaged only for the tokens where the switch defers to it, this architecture improves response quality while conserving computational resources. The MIT team found that the approach yields substantial performance gains over simple LLMs fine-tuned for the specific tasks and working independently.
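A back-of-the-envelope cost model illustrates why deferring only occasionally can save compute. The per-token costs below are made-up illustrative units, and the sketch assumes the base model runs on every token (for example, to supply the switch’s input) while the expert runs only on deferred tokens; real costs depend on model sizes and hardware.

```python
from typing import Sequence


def generation_cost(deferred: Sequence[bool], base_cost: float, expert_cost: float) -> float:
    # Assumption: the base model does a forward pass for every token (e.g. to
    # feed the switch), while the expert runs only where the switch deferred.
    n_tokens = len(deferred)
    n_expert = sum(deferred)
    return n_tokens * base_cost + n_expert * expert_cost


def expert_only_cost(n_tokens: int, expert_cost: float) -> float:
    # Baseline: the specialized model generates every token by itself.
    return n_tokens * expert_cost


# Hypothetical per-token costs in arbitrary units (not measured figures).
BASE_COST, EXPERT_COST = 1.0, 5.0
deferred = [False] * 6 + [True]  # 7 tokens, 1 handled by the expert

collab = generation_cost(deferred, BASE_COST, EXPERT_COST)  # 7*1 + 1*5 = 12.0
alone = expert_only_cost(len(deferred), EXPERT_COST)        # 7*5 = 35.0
print(f"collaborative/expert-only cost ratio: {collab / alone:.2f}")  # 0.34
```

Under these assumptions the savings shrink as the deferral rate rises: if the switch deferred on every token, the collaborative setup would cost more than the expert alone, since the base model’s work would be pure overhead.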

To see Co-LLM’s value, consider a general-purpose LLM asked to name the ingredients of a particular prescription drug. On its own, the model may answer incorrectly. Paired through Co-LLM with a specialized biomedical model, it can produce a far more accurate answer. That advantage matters in fields where precision is crucial and misinformation can have severe consequences.

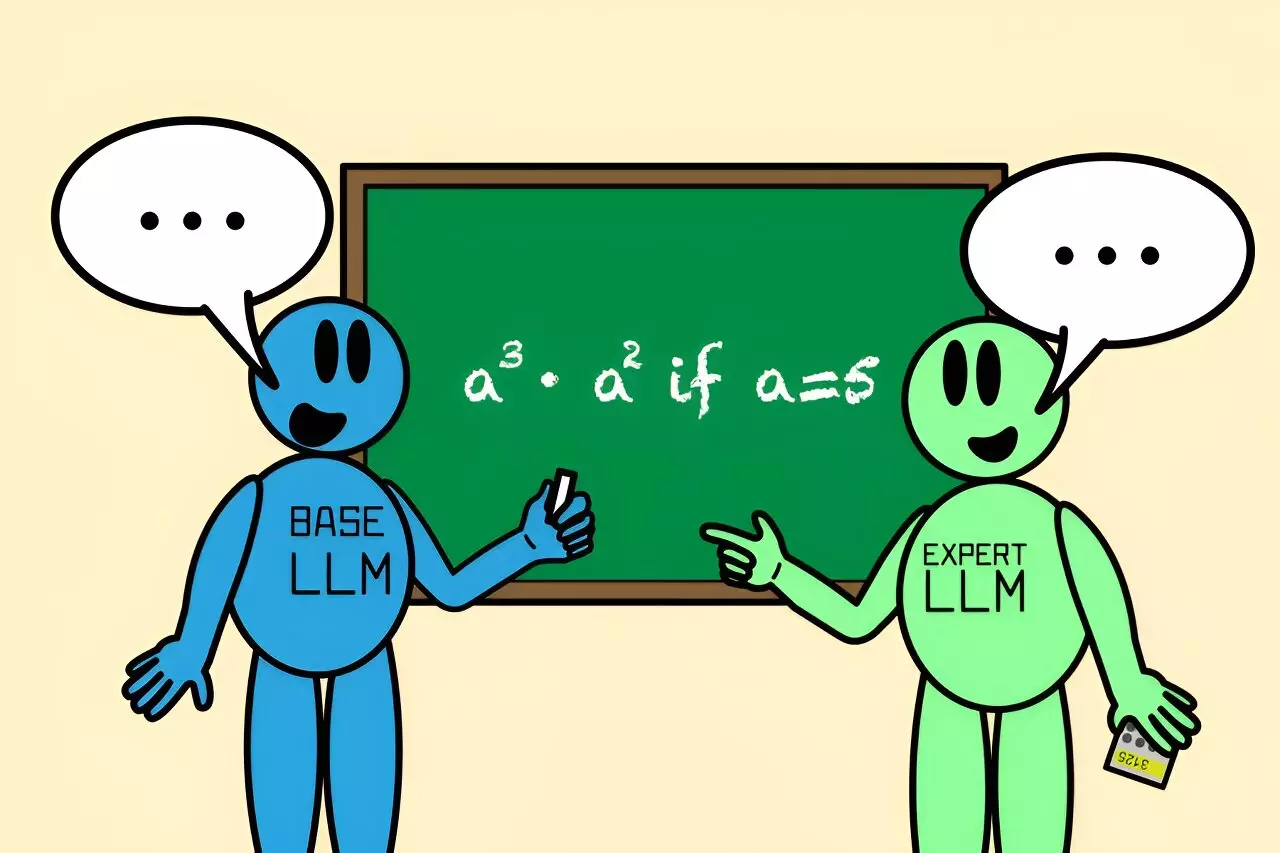

The researchers also used a math problem to illustrate Co-LLM’s strengths. Working alone, the general-purpose model calculated a product to be 125; collaborating with Llemma, a large math-specialized LLM, it arrived at the correct answer of 3,125. The example shows how handing the right steps to an expert can turn a wrong answer into a correct one.
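Assuming the product in question is $a^3 \cdot a^2$ evaluated at $a = 5$ (the form in which this example has been reported), the exponent rule confirms the collaborative answer:

$$
a^3 \cdot a^2 = a^{3+2} = a^5, \qquad 5^5 = 3125,
$$

whereas $125 = 5^3$ is the value of the first factor alone.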

The prospects for Co-LLM are broad. The researchers envision a model that not only benefits from expert input in the moment but can also improve as new data arrives. For instance, if an expert model gives a response that later proves incorrect, Co-LLM could potentially backtrack and offer a correction, much as people reassess and refine their answers as they learn.

Keeping information current is also essential for generated content to stay relevant and accurate. If the base model can be updated with the latest data, Co-LLM could provide more comprehensive answers, bridging the gap between static knowledge bases and a constantly changing body of information.

The Co-LLM framework is a notable step forward in using LLMs for complex problem-solving across domains. By mimicking the way human experts collaborate, it improves LLM performance and points toward a new understanding of how AI models can work together. As researchers continue to refine the approach, LLMs may come to produce impressive outputs with an accuracy and resource efficiency akin to effective human collaboration.