In an increasingly digital world, user privacy has become a hot topic, especially as technology companies like Meta (formerly Facebook) push the envelope on artificial intelligence integration. Meta recently introduced an AI feature across its messaging platforms, including Facebook Messenger, Instagram, WhatsApp, and others, prompting users to consider what this technology means for their private conversations. The move has sparked a wide range of concerns and discussions about user privacy, data security, and the ethical use of AI.

The new capabilities let users interact with Meta’s artificial intelligence assistant, Meta AI, directly within their chats. Users can summon the AI by mentioning it in a conversation, then ask it questions and receive immediate responses. According to Meta, the goal is to give users quick access to information that enhances their chatting experience. However, the feature comes with a caveat: any dialogue that occurs in chats where Meta AI is invoked may become part of the data pool used for AI training, potentially compromising user privacy.

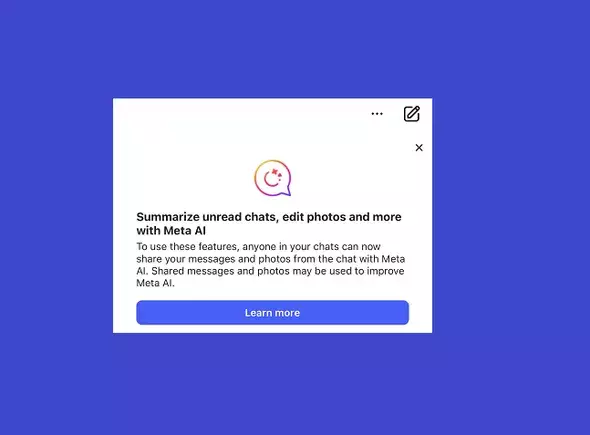

Meta has introduced a pop-up notification within its apps that warns users about these risks, advising them to be cautious about sharing sensitive information, such as passwords or financial data, in conversations where they’ve used Meta AI. This attempt to inform users is a double-edged sword: it raises awareness, but it also draws attention to the inherent risks of sharing personal information in a digital landscape that is seldom fully secure.

At the heart of this situation lies the paradox of privacy in the age of digital communication. Users often skim past the terms and conditions they agree to, leaving themselves vulnerable to privacy invasions. Even with Meta’s warning in place, using Meta AI within chats presents a real dilemma: the very feature designed to enhance conversations becomes a new avenue for potential data exploitation.

While Meta insists it takes measures to anonymize data before using it for AI training, anonymization is not foolproof: data stripped of names can sometimes be re-identified by combining it with other information. The fear that sensitive details could later resurface or be misused looms over anyone who wants to engage with Meta AI. Most users may not fully grasp how extensively their data can be processed, which can lead to inadvertent disclosures in what they previously perceived as private communication.

Meta’s proposed mitigation offers users limited empowerment: it suggests avoiding Meta AI in chats where sensitive information is discussed. This advice hints at a broader issue. The onus of protecting privacy often falls on users, creating a landscape in which they may feel paralyzed by fear of data exploitation and are left weighing the benefits of AI interaction against the risk of compromising their confidentiality.

The prospect of personal information being used to fuel further AI development is concerning. It compels users to think critically about their online activities, not just on Meta’s platforms but across all digital channels. This underlines the need for heightened awareness and caution, turning the user experience into a constant balancing act between convenience and security.

As AI technologies become ingrained in everyday communication tools, ethical questions emerge about the role companies like Meta play in managing user data. Is it ethical for Meta to harvest data from user interactions in exchange for AI convenience? Companies must also consider how they maintain user trust. Transparency and ethical data use should be paramount as they roll out features that substantially affect the user experience.

Users deserve comprehensive information about how their data will be used, which raises the question: should platforms like Meta offer more robust controls over user data than opt-out features buried in complex settings? Platforms should create frictionless pathways for user empowerment rather than further complicating an already complex digital landscape.

Meta’s new AI features in messaging applications have undoubtedly created an intriguing dynamic for both users and the company. As AI technology advances, however, protecting user privacy will only become more pressing. While the immediacy and convenience of AI may be appealing, the stakes of personal data security demand that users remain vigilant. As individuals navigate these interactions, they should prioritize their privacy and stay informed so they can make better-educated choices about their digital communications. In the end, technology should enhance our lives without compromising our fundamental right to privacy.